Optimization within a neural network is essential for achieving high performance. Optimization is the process of adjusting the weights and biases of the network, using the gradients computed during backpropagation, so as to reduce the cost function, which measures how well the network is performing its particular task; each adjustment aims to improve the result of the next attempt. When creating a neural network, it’s vital to consider the task the network will perform and the computational resources available in order to select the most suitable optimization method.

Problems:

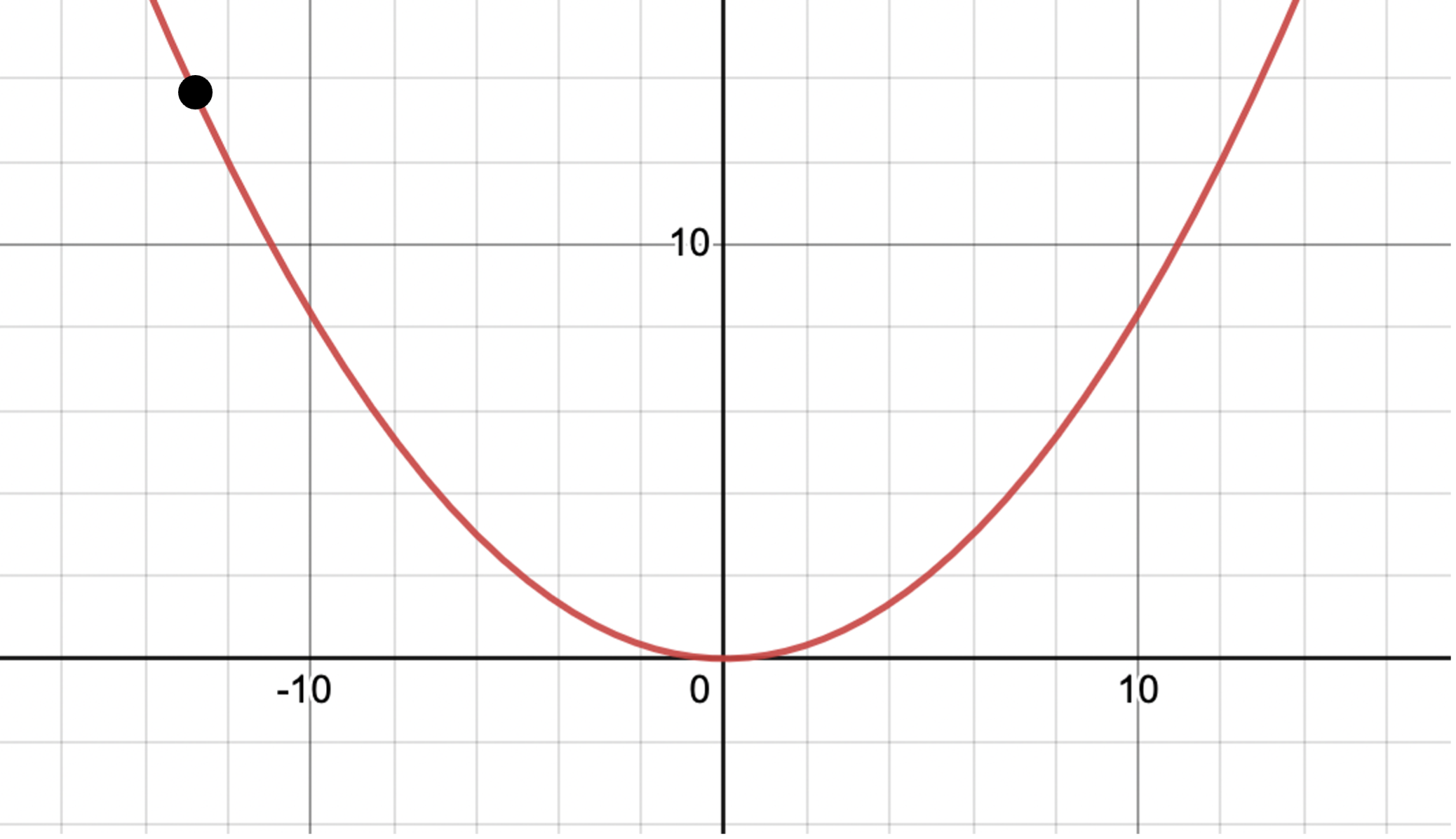

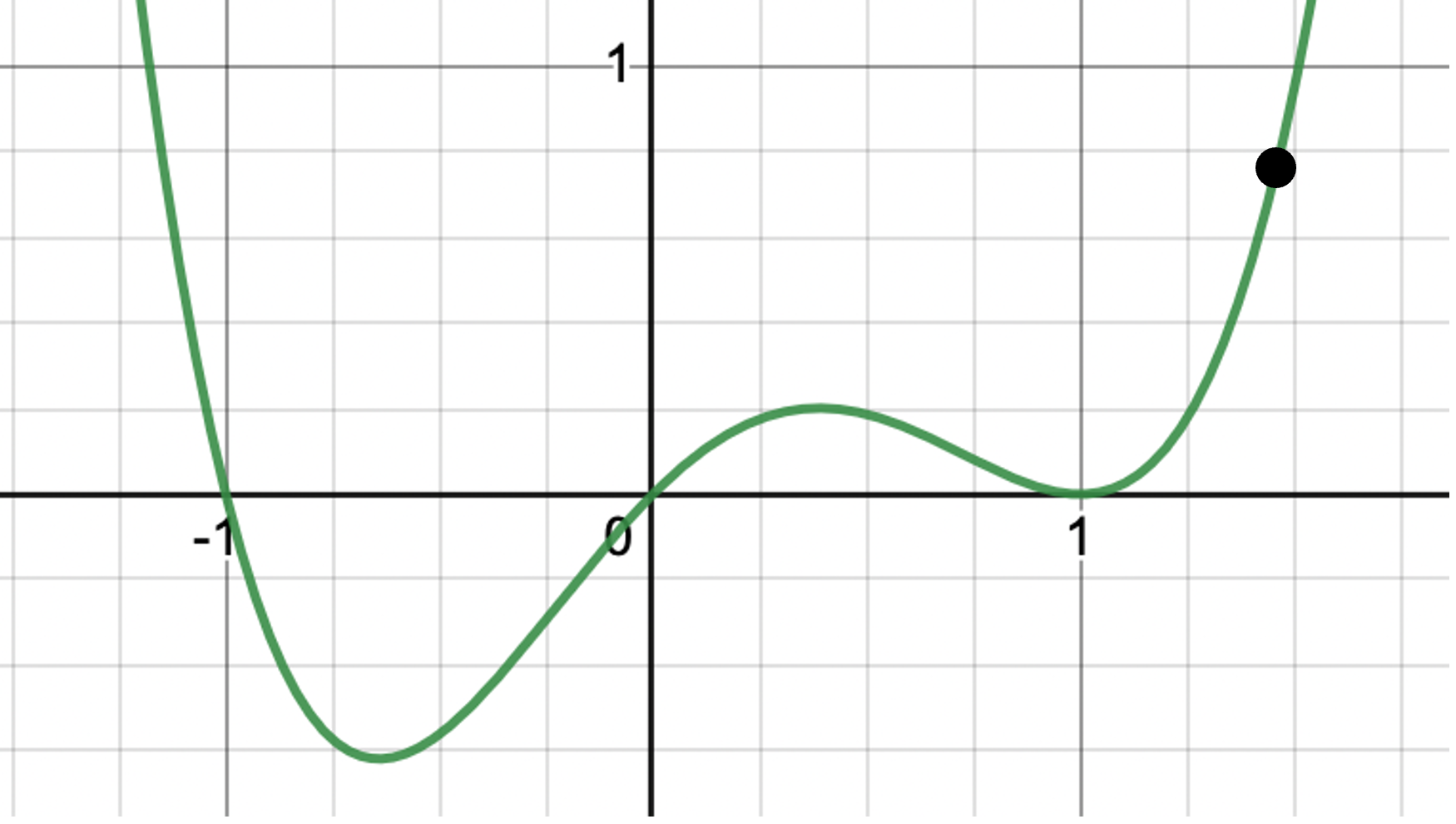

A simple and common example of optimization is gradient descent. This approach uses calculus to find the gradient (slope) of the cost function with respect to each value, then moves each value ‘down’ the slope to a position of lower ‘cost’. Below is an oversimplified 2-dimensional example:

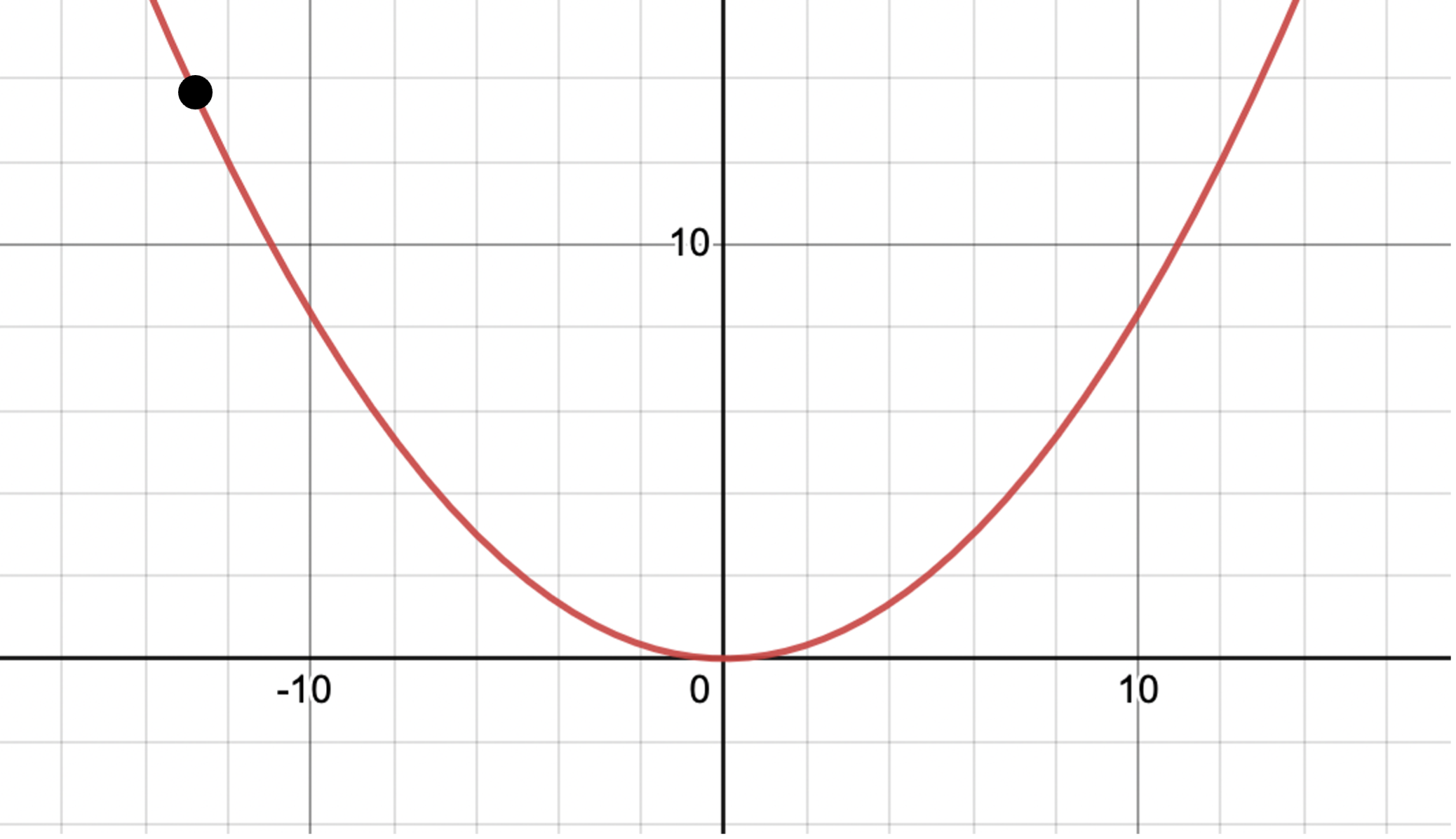
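The same idea can be written as a few lines of Python. This is a minimal sketch assuming a hypothetical one-weight cost function C(w) = (w - 3)², whose minimum is at w = 3; a real network applies the same update to every weight and bias at once.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly move the weight against the gradient, i.e. 'down' the slope."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


# Hypothetical cost C(w) = (w - 3)^2 with its minimum at w = 3.
def cost(w):
    return (w - 3) ** 2


def cost_gradient(w):
    # dC/dw = 2(w - 3): negative left of the minimum, positive right of it.
    return 2 * (w - 3)


w_final = gradient_descent(cost_gradient, w0=0.0)
print(round(w_final, 4), round(cost(w_final), 8))  # prints: 3.0 0.0
```

Because the gradient points uphill, subtracting it always moves the weight towards lower cost; the learning rate only controls how large each move is.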

However, when presented with another example slightly more representative of the real world, we encounter an issue:

Here the value has missed the global minimum and fallen into a ‘local trap’ (a local minimum): the optimizer has found the point of lowest cost compared with its immediate surroundings, but not the lowest point within the whole search space. This is a key problem for many optimization methods when the search space is large.

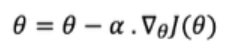
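The trap can be reproduced with a toy example. This is a minimal sketch assuming a hypothetical cost C(w) = w⁴ - 3w² + w, chosen only because it has a global minimum near w = -1.30 and a local minimum near w = 1.13. Plain gradient descent with the same small learning rate ends up in a different minimum depending on where it starts:

```python
def gradient_descent(grad, w0, learning_rate, steps):
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


# Hypothetical non-convex cost with two minima.
def cost(w):
    return w ** 4 - 3 * w ** 2 + w


def cost_gradient(w):
    # dC/dw = 4w^3 - 6w + 1
    return 4 * w ** 3 - 6 * w + 1


from_right = gradient_descent(cost_gradient, w0=2.0, learning_rate=0.01, steps=1000)
from_left = gradient_descent(cost_gradient, w0=-2.0, learning_rate=0.01, steps=1000)
print(f"start  2.0 -> w = {from_right:.3f}, cost = {cost(from_right):.3f}")  # local minimum
print(f"start -2.0 -> w = {from_left:.3f}, cost = {cost(from_left):.3f}")    # global minimum
```

Starting from w = 2.0, every step goes downhill, so the optimizer stops at the nearby local minimum even though a lower cost exists further left.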

The learning rate of a network determines how far each weight moves with each update. The local trap problem can be partly mitigated in gradient descent by choosing a different learning rate: if the learning rate is high, the weight value may ‘jump’ out of or skip over local minima, although a rate that is too high can overshoot good minima as well and fail to converge. This type of optimization is best suited to very simple problems, as it becomes insufficient when the number of parameters grows too large.
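The effect of the learning rate can be sketched with the same hypothetical cost C(w) = w⁴ - 3w² + w, starting both runs at w = 2.0 and changing only the learning rate. With 0.01 the run settles in the local minimum near 1.13; with 0.1 the very first step overshoots to about -0.1, past the local minimum, and the run settles in the global minimum near -1.30. This holds for this particular function and starting point only; a larger rate is not guaranteed to help in general.

```python
def gradient_descent(grad, w0, learning_rate, steps):
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


def cost_gradient(w):
    # Gradient of the hypothetical cost C(w) = w^4 - 3w^2 + w
    return 4 * w ** 3 - 6 * w + 1


results = {}
for lr in (0.01, 0.1):
    # Same start, same number of steps; only the step size differs.
    results[lr] = gradient_descent(cost_gradient, w0=2.0, learning_rate=lr, steps=500)
    print(f"learning rate {lr}: w = {results[lr]:.3f}")
```

Had the rate been much larger still, the first step would have thrown the weight so far out that each following update grew larger rather than smaller, which is why the learning rate has to be tuned rather than simply increased.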

ADAM (adaptive moment estimation) is widely regarded as one of the best general-purpose optimization methods. It combines mini-batch gradient descent with momentum and RMSprop, both of which originate from simple gradient descent. The ADAM approach has only a few hyperparameters to tune, and by giving each parameter its own adaptive learning rate it achieves high-quality optimization while keeping computation time and cost low.
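The ADAM update can be sketched in plain Python. This is a minimal illustration, not a library implementation: the default hyperparameters (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8) are the settings suggested in the original ADAM paper, while the two-weight cost function and the names `adam` and `cost_gradient` are hypothetical. For simplicity it uses the exact gradient of a toy cost rather than mini-batch gradients from a real network.

```python
import math


def adam(grad, w0, learning_rate=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimise a cost with ADAM, given a function returning dC/dw for a list of weights."""
    w = list(w0)
    m = [0.0] * len(w)  # moving average of gradients (the momentum part)
    v = [0.0] * len(w)  # moving average of squared gradients (the RMSprop part)
    for t in range(1, steps + 1):
        g = grad(w)
        for i in range(len(w)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction: m and v start at zero, so early averages are too small.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Each weight gets its own effective step size, learning_rate / sqrt(v_hat).
            w[i] -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Hypothetical cost C(a, b) = (a - 3)^2 + 10 * (b + 1)^2, minimum at (3, -1).
# The two weights have very different curvatures, which ADAM's per-weight scaling handles.
def cost_gradient(w):
    return [2 * (w[0] - 3), 20 * (w[1] + 1)]


w_final = adam(cost_gradient, w0=[0.0, 0.0], learning_rate=0.1, steps=2000)
print([round(x, 3) for x in w_final])
```

In practice this optimizer is provided ready-made by deep learning frameworks (for example `torch.optim.Adam` in PyTorch), so a hand-written version like this is mainly useful for understanding how the moving averages interact.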

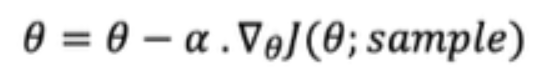

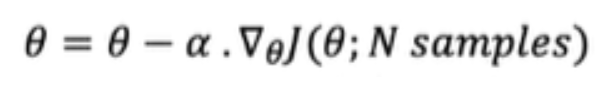

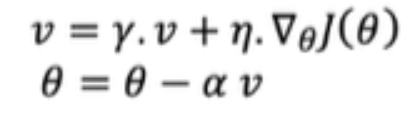

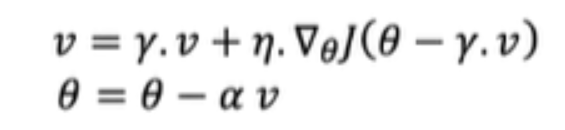

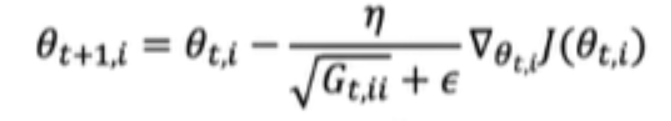

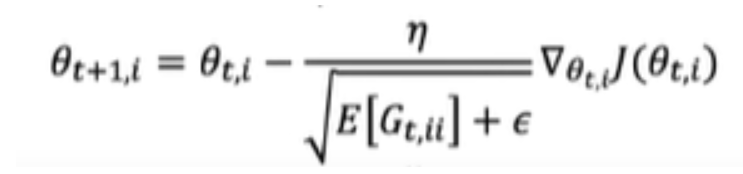

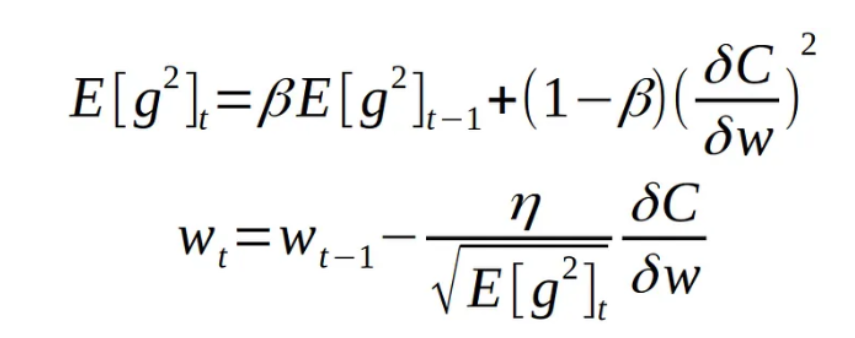

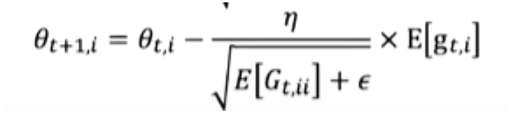

Step-by-step evolution of gradient descent to ADAM:

“E[g²]: moving average of squared gradients. dC/dw: gradient of the cost function with respect to the weight. η: learning rate. β: moving average parameter (good default value: 0.9)”

Timeline of the ADAM optimizer’s evolution; equations provided by CodeEmporium.

Reference: https://www.youtube.com/@CodeEmporium
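One common way to write these steps is shown below, with η for the learning rate, β for the moving-average parameter, E[g²]ₜ for the moving average of squared gradients and ε for a small constant that avoids division by zero. This is a sketch of the standard textbook forms; the exact notation in the CodeEmporium video may differ (for instance, some momentum variants omit the (1 − β) factor). ADAM keeps both the momentum average mₜ and the RMSprop-style average vₜ, and corrects both for their zero initialization.

$$
\begin{aligned}
&\text{Gradient descent:} && w_t = w_{t-1} - \eta \frac{\partial C}{\partial w} \\
&\text{Momentum:} && m_t = \beta m_{t-1} + (1-\beta)\frac{\partial C}{\partial w}, \quad w_t = w_{t-1} - \eta\, m_t \\
&\text{RMSprop:} && E[g^2]_t = \beta E[g^2]_{t-1} + (1-\beta)\left(\frac{\partial C}{\partial w}\right)^2, \quad w_t = w_{t-1} - \frac{\eta}{\sqrt{E[g^2]_t} + \epsilon}\frac{\partial C}{\partial w} \\
&\text{ADAM:} && m_t = \beta_1 m_{t-1} + (1-\beta_1)\frac{\partial C}{\partial w}, \quad v_t = \beta_2 v_{t-1} + (1-\beta_2)\left(\frac{\partial C}{\partial w}\right)^2 \\
& && \hat m_t = \frac{m_t}{1-\beta_1^t}, \quad \hat v_t = \frac{v_t}{1-\beta_2^t}, \quad w_t = w_{t-1} - \frac{\eta\, \hat m_t}{\sqrt{\hat v_t} + \epsilon}
\end{aligned}
$$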

As a mix of two optimization techniques, ADAM combines the strengths of both. Its authors describe it as computationally efficient, with little memory requirement, and well suited both to problems that are large in terms of data or parameters and to problems with very noisy or sparse gradients, all factors that can reduce the effectiveness of other optimizers.

Reference: https://arxiv.org/pdf/1412.6980.pdf

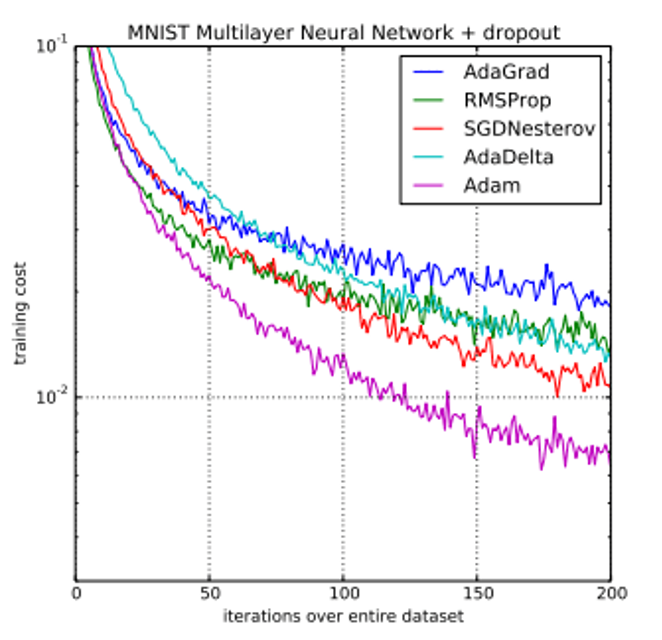

Compared with the original gradient descent, ADAM is much more sophisticated and overcomes many of its challenges; it can still occasionally fall into local traps, but the risk is reduced. Its strong performance relative to other well-established optimizers can be seen below:

Reference: https://arxiv.org/pdf/1412.6980.pdf

While ADAM is very successful in many areas, it is not the best option for every task. For example, genetic algorithms may perform better on some high-dimensional problems.

Reference: https://link.springer.com/article/10.1023/A:1022626114466

The applications of neural networks within the field of Biomedical Engineering are almost endless. With advanced optimization techniques such as the ADAM optimizer discussed above, neural networks are becoming practical for tasks that previously required human problem solving. This opens up major opportunities in medicine for more accessible and reliable data analysis, both in patient-oriented testing, such as MRI scans and biopsies, and in research into patient conditions and new medicines.

The new accessibility of powerful neural networks will allow significantly more research and testing to be done globally, which could introduce a new age of advancement in biology, medicine and engineering.